Traffic Sign Classifier

Deep learning traffic sign classification using a CNN based on the LeNet architecture, trained on the German Traffic Sign dataset and achieving 92.90% test accuracy.

A self-driving car must perceive traffic indications, most of which are conveyed through traffic signs, and drive accordingly. In this project, I develop an algorithm to classify traffic signs. The project uses the German Traffic Sign dataset.

Programming platform and libraries: Python, OpenCV, TensorFlow, Pandas, Pickle.

Methodology and Results

Dataset

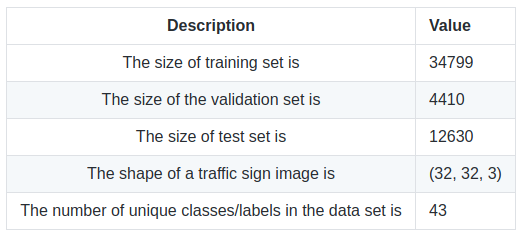

For this project, I used the German Traffic Sign dataset for classification. The following table summarizes the dataset.

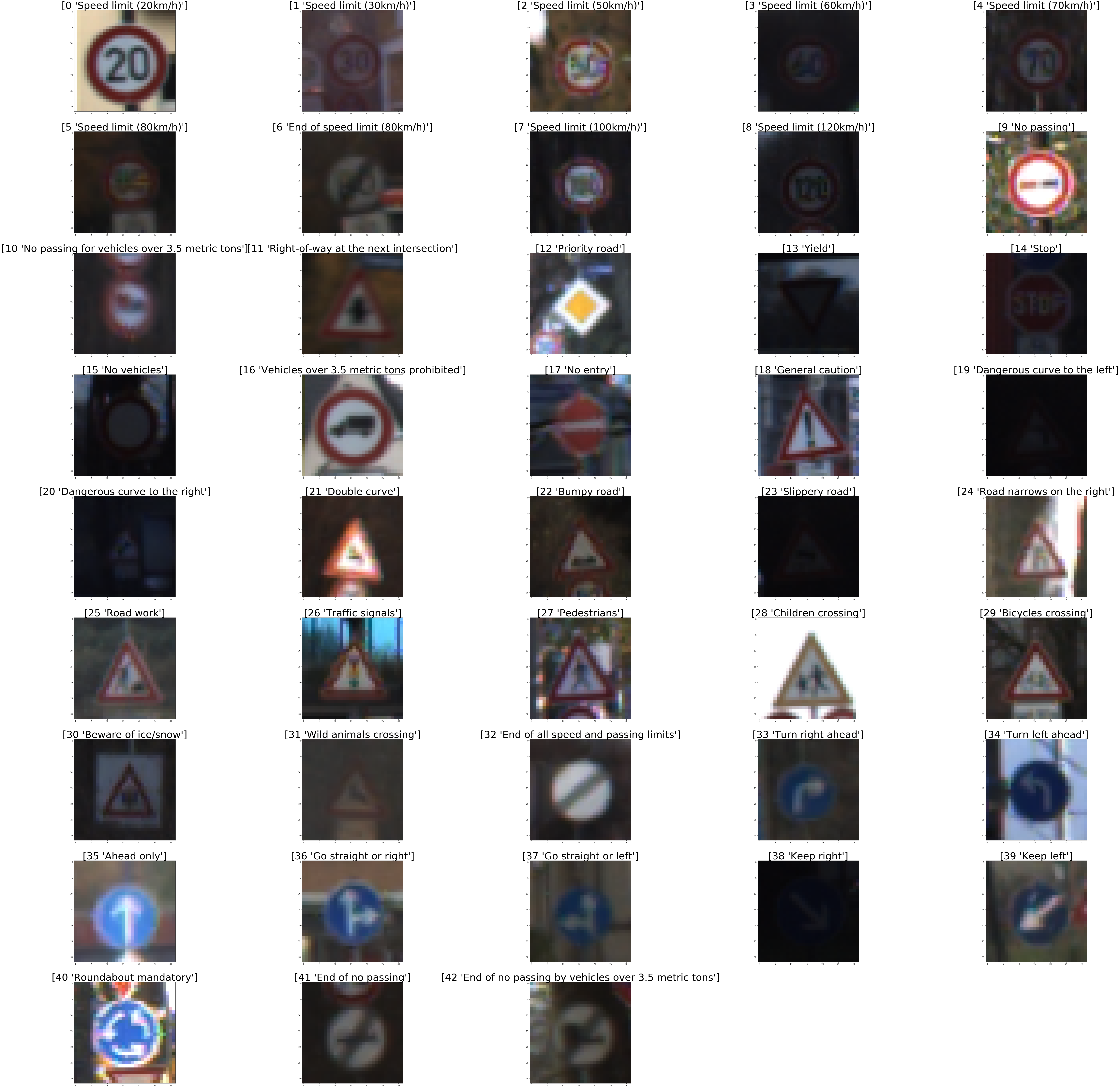

The following are randomly picked images with their labels, each from a different class.

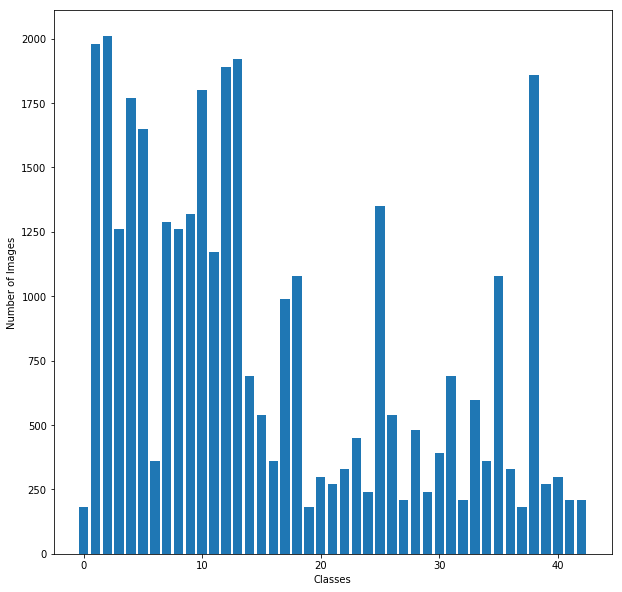

Training Set

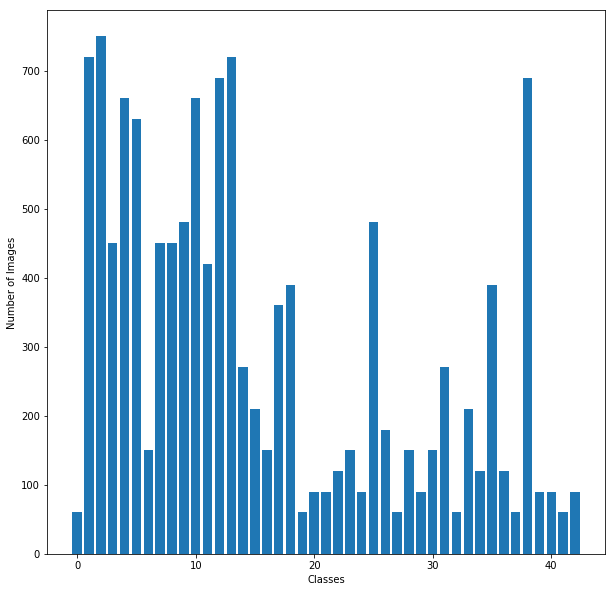

The figure below shows the number of image samples per class in the training set.

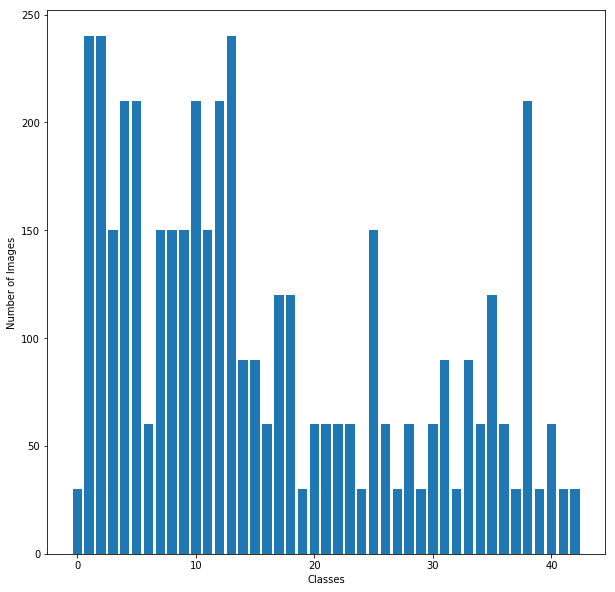
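Per-class counts like those plotted above can be computed directly from the label array. The sketch below is only illustrative: `samples_per_class` and the sample labels are hypothetical names and values, not taken from the project code.

```python
from collections import Counter


def samples_per_class(labels):
    """Return a {class_id: count} dict, ordered by class id."""
    counts = Counter(labels)
    return dict(sorted(counts.items()))


# Hypothetical labels; in the project they would come from the pickled dataset.
y_example = [0, 1, 1, 2, 2, 2, 1]
print(samples_per_class(y_example))  # {0: 1, 1: 3, 2: 3}
```

The same function can be applied to the validation and testing labels to compare how the classes are distributed across the three sets.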

Validation Set

The figure below shows the number of image samples per class in the validation set.

Testing Set

The figure below shows the number of image samples per class in the testing set.

Image Pre-processing

Each image is first normalized so that its pixel values lie between -1.0 and 1.0 and are centered around zero. The network trains relatively faster on normalized inputs. The image is then converted from the RGB color space to grayscale, which reduces the input depth from three channels to one. The images are fed to the network in batches, and they are shuffled before batching to eliminate any ordering bias.
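The pre-processing steps can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the function name `preprocess`, the `(x - 128) / 128` scaling, and the unweighted channel average used for grayscale are all assumptions.

```python
import numpy as np


def preprocess(images):
    """Normalize uint8 RGB images to roughly [-1, 1], then convert to grayscale.

    images: array of shape (N, H, W, 3) with dtype uint8.
    Returns a float32 array of shape (N, H, W, 1).
    """
    x = images.astype(np.float32)
    # Assumed scaling: maps 0..255 to [-1, 1), centered near zero.
    x = (x - 128.0) / 128.0
    # Assumed grayscale conversion: plain average over the three channels.
    return x.mean(axis=3, keepdims=True)


# Hypothetical batch: one all-black and one all-white 4x4 image.
batch = np.zeros((2, 4, 4, 3), dtype=np.uint8)
batch[1] = 255
gray = preprocess(batch)
print(gray.shape)  # (2, 4, 4, 1)
```

Note that this scaling only centers the values approximately; subtracting the mean of the training set would be needed for an exactly zero mean.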

Model Architecture

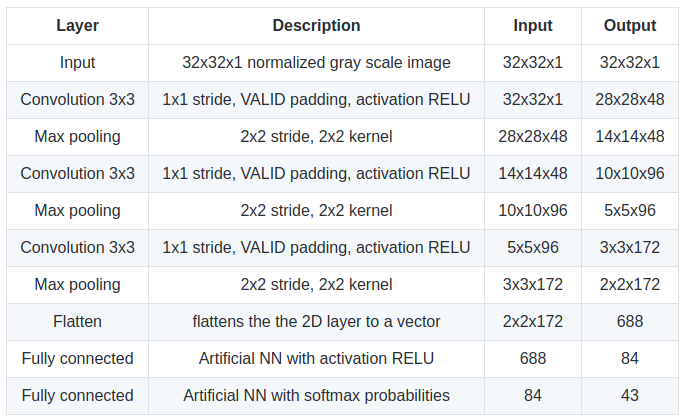

Design

LeNet is a popular architecture for classifying digits, traffic signs, and similar small images. My design consists of the layers tabulated below.
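As a rough orientation, and not necessarily matching the layer sizes used in this project, the tensor shapes of a textbook LeNet-5 can be worked out with simple arithmetic. The 32x32 grayscale input, the 5x5 kernels, the 6 and 16 filters, the 120 and 84 unit dense layers, and the 43 output classes are assumptions made for this sketch.

```python
def conv_out(size, kernel, stride=1):
    """Output width/height of an unpadded ('valid') convolution or pooling layer."""
    return (size - kernel) // stride + 1


def lenet5_shapes(input_size=32, num_classes=43):
    """Trace layer output shapes through a textbook LeNet-5 (assumed sizes)."""
    shapes = []
    s = conv_out(input_size, 5)          # 5x5 convolution, 6 filters
    shapes.append(("conv1", (s, s, 6)))
    s = conv_out(s, 2, stride=2)         # 2x2 pooling, stride 2
    shapes.append(("pool1", (s, s, 6)))
    s = conv_out(s, 5)                   # 5x5 convolution, 16 filters
    shapes.append(("conv2", (s, s, 16)))
    s = conv_out(s, 2, stride=2)         # 2x2 pooling, stride 2
    shapes.append(("pool2", (s, s, 16)))
    shapes.append(("flatten", (s * s * 16,)))
    shapes.append(("fc1", (120,)))
    shapes.append(("fc2", (84,)))
    shapes.append(("logits", (num_classes,)))
    return shapes


for name, shape in lenet5_shapes():
    print(name, shape)
```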

Training and Validation

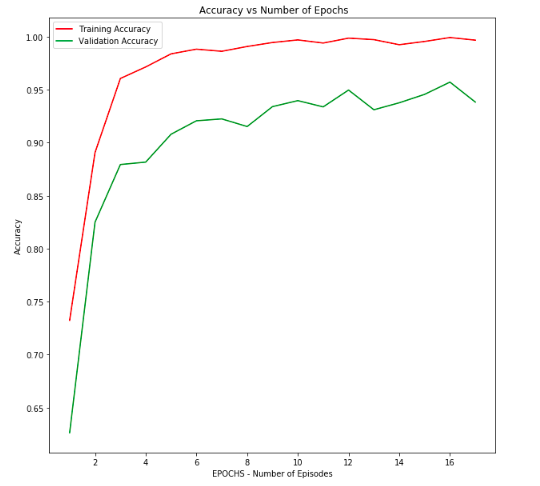

As mentioned earlier, the network is trained on the images in batches. Each epoch is one full pass over the training set, processed one batch at a time. The following parameters were used for training.

- EPOCHS = 17 - After 17 epochs, the accuracy showed little or no further improvement.

- BATCH_SIZE = 128 - I trained the network model on a local CPU and hence preferred a modest batch size of 128 images per batch.

- LEARNING RATE = 0.001 - Since the Adam optimizer was used, I chose 0.001, the commonly recommended default learning rate for Adam.
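The way these parameters interact can be sketched with a plain Python loop. This is a hedged illustration only: `iterate_batches`, `train`, and the `train_step` callback are hypothetical names, and in the real project `train_step` would run one Adam update in TensorFlow.

```python
import math
import random

EPOCHS = 17
BATCH_SIZE = 128


def iterate_batches(num_samples, batch_size, rng):
    """Yield lists of shuffled sample indices, one list per batch."""
    indices = list(range(num_samples))
    rng.shuffle(indices)  # reshuffle at the start of every epoch
    for start in range(0, num_samples, batch_size):
        yield indices[start:start + batch_size]


def batches_per_epoch(num_samples, batch_size):
    """Number of batches needed to cover the data once (last batch may be smaller)."""
    return math.ceil(num_samples / batch_size)


def train(num_samples, train_step, epochs=EPOCHS, batch_size=BATCH_SIZE, seed=0):
    """Call train_step(batch_indices) for every batch of every epoch; return step count."""
    rng = random.Random(seed)
    steps = 0
    for _ in range(epochs):
        for batch in iterate_batches(num_samples, batch_size, rng):
            train_step(batch)
            steps += 1
    return steps


# Hypothetical dataset of 1000 samples with a no-op training step.
print(batches_per_epoch(1000, BATCH_SIZE))  # 8
print(train(1000, lambda batch: None))      # 136
```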

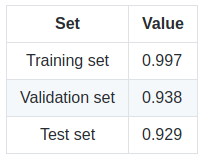

The following are the accuracies for the training set, the validation set after the final epoch, and the testing set.

The graph below compares the training and validation accuracies against the number of epochs run.

Testing on Unknown Images

I picked the following five previously unseen images for testing.

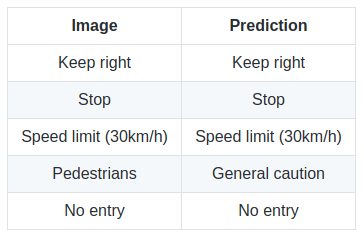

The predictions for the images are tabulated as follows:

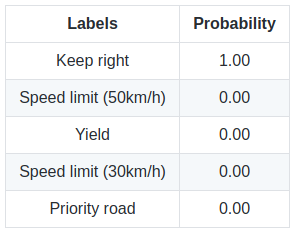

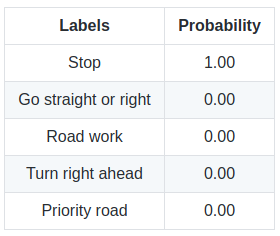

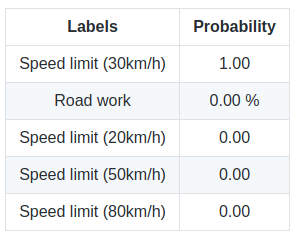

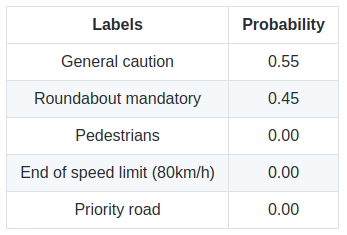

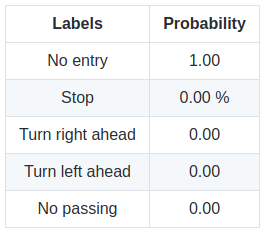

The predicted probabilities of the individual labels for each image are as follows:

1. Image 1 - Keep Right

2. Image 2 - Stop

3. Image 3 - Speed limit (30km/h)

4. Image 4 - Pedestrians

5. Image 5 - No entry
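Per-label probabilities of this kind are typically obtained by applying softmax to the network's output logits and keeping the highest-ranked classes. The sketch below illustrates that idea in plain Python; the function names, the choice of `k`, and the example logits are assumptions, not values from this project.

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities that sum to 1 (numerically stable)."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(probs, k=5):
    """Return the k (class_id, probability) pairs with the highest probability."""
    ranked = sorted(enumerate(probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Hypothetical logits for a 4-class problem.
probs = softmax([2.0, 1.0, 0.1, -1.0])
for class_id, p in top_k(probs, k=3):
    print(class_id, round(p, 3))
```

A confident prediction shows one probability close to 1.0, while a more even spread across several labels indicates that the model is uncertain about the image.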

Conclusion

This project trains a network with an architecture similar to LeNet and obtains an accuracy of 92.90% on the test set. The accuracy could likely be improved by adding batch normalization after every convolutional layer in the neural network model.

Code Repository: GitHub